Note: This is a reblog from the OKFN Science Blog.

It’s hard to believe that it has been over a year since Peter Murray-Rust announced the new Panton fellows at OKCon 2013. I am immensely proud that I was one of the 2013/14 Panton Fellows and the first non-UK-based fellow. In this post, I will recap my activities during the last year and give an outlook on things to come after the end of the fellowship. At the end of the post, you can find all outputs of my fellowship at a glance. My fellowship had two focal points: the work on open and transparent altmetrics and the promotion of open science in Austria and beyond.

Open and transparent altmetrics

The blog post entitled “All metrics are wrong, but some are useful” sums up my views on (alt)metrics: I argue that no single number can determine the worth of an article, a journal, or a researcher. Instead, we have to find those numbers that give us a good picture of the many facets of these entities and put them into context. Openness and transparency are two necessary properties of such an (alt)metrics system, as this is the only sustainable way to uncover inherent biases and to detect attempts at gaming. In my comment on the NISO whitepaper on altmetrics standards, I therefore maintained that openness and transparency should be strongly considered for altmetrics standards.

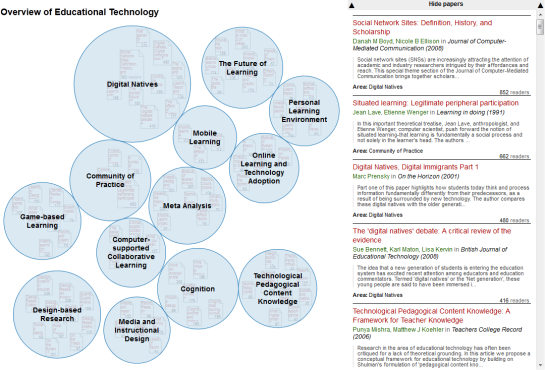

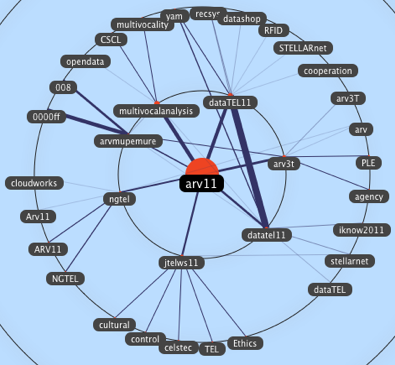

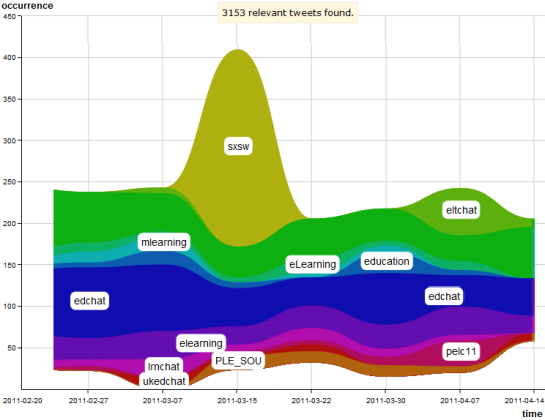

In another post on “Open and transparent altmetrics for discovery”, I laid out that altmetrics have a largely untapped potential for visualization and discovery that goes beyond rankings of top papers and researchers. In order to help uncover this potential, I released the open source visualization Head Start that I developed as part of my PhD project. Head Start gives scholars an overview of a research field based on relational information derived from altmetrics. In two blog posts, “New version of open source visualization Head Start released” and “What’s new in Head Start?”, I chronicled the development of a server component, the introduction of the timeline visualization created by Philipp Weißensteiner, and the integration of Head Start with Conference Navigator 3, a nifty conference scheduling system. With Chris Kittel and Fabian Dablander, I took first steps towards automatic visualizations of PLOS papers. Recently, Head Start also became part of the Open Knowledge Labs. In order to make the maps created with Head Start openly available to all, I will set up a server and website for the project in the months to come. The ultimate goal would be to have an environment where everybody can create their own maps based on open knowledge and share them with the world. If you are interested in contributing to the project, please get in touch with me, or have a look at the open feature requests.

Evolution of the UMAP conference visualized in Head Start. More information in Kraker, P., Weißensteiner, P., & Brusilovsky, P. (2014). Altmetrics-based Visualizations Depicting the Evolution of a Knowledge Domain. 19th International Conference on Science and Technology Indicators (STI 2014), 330-333.

Promotion of open science and open data

Regarding the promotion of open science, I teamed up with Stefan Kasberger and Chris Kittel of openscienceasap.org and the Austrian chapter of Open Knowledge for a series of events that were intended to generate more awareness in the local community. In October 2013, I was a panelist at the openscienceASAP kick-off event at the University of Graz entitled “The Changing Face of Science: Is Open Science the Future?”. In December, I helped organize an OKFN Open Science Meetup in Vienna on altmetrics. I also gave an introductory talk on this occasion that got more than 1000 views on Slideshare. In February 2014, I was interviewed for the openscienceASAP podcast on my Panton Fellowship and the need for an inclusive approach to open science.

In June, Panton Fellowship mentors Peter Murray-Rust and Michelle Brook visited Vienna. The three-day visit, made possible by the Austrian Science Fund (FWF), kicked off with a lecture by Peter and Michelle at the FWF. On the next day, the two led a well-attended workshop on content mining at the Institute of Science and Technology Austria. The visit ended with a hackday organized by openscienceASAP, and an OKFN-AT meetup on content mining. Finally, last month, I gave a talk on open data at the “Open Science Panel” on board the MS Wissenschaft in Vienna.

I also became active in the Open Access Network Austria (OANA) of the Austrian Science Fund. Specifically, I am contributing to the working group “Involvement of researchers in open access”. There, I am responsible for a visibility concept for open access researchers. Throughout the year, I have also contributed to a monthly sum-up of open science activities in order to make these activities more visible within the local community. You can find the sum-ups (only available in German) on the openscienceASAP stream.

I also went to a lot of events outside Austria where I argued for more openness and transparency in science: OKCon 2013 in Geneva, SpotOn 2013 in London, and Science Online Together 2014 in Raleigh (NC). At the Open Knowledge Festival in Berlin, I was session facilitator for “Open Data and the Panton Principles for the Humanities. How do we go about that?”. The goal of this session was to devise a set of clear principles which describe what we mean by Open Data in the humanities, what these should contain and how to use them. In my role as an advocate for reproducibility, I wrote a blog post on why reproducibility should become a quality criterion in science. The post sparked a lot of discussion, and was widely linked and tweeted.

What’s next?

The Panton Fellowship was a unique opportunity for me to work on open science, to visit open knowledge events around the world, and to meet many new people who are passionate about the topic. Naturally, the end of the fellowship does not mark the end of my involvement with the open science community. In my new role as a scientific project developer for Science 2.0 and open science at Know-Center, I will continue to advocate openness and transparency. As part of my research on altmetrics-driven discovery, I will also pursue my open source work on the Head Start framework. With regard to outreach work, I am currently busy drafting a visibility concept for open access researchers in the Open Access Network Austria (OANA). Furthermore, I am involved in efforts to establish a German-speaking open science group.

I had a great year, and I would like to thank everyone who got involved. Special thanks go to Peter Murray-Rust and Michelle Brook for administering the program and for their continued support. As always, if you are interested in helping out with one or the other project, please get in touch with me. If you have comments or questions, please leave them in the comments field below.

All outputs at a glance

Head Start – open source research overview visualization

Blog Posts

- “It’s not only peer reviewed, it’s reproducible”

- Open and transparent altmetrics for discovery

- New version of open source visualization Head Start released

- What’s new in Head Start?

- All metrics are wrong, but some are useful

Audio and Video

- Panton Fellows introduction at OKCon 2013

- Panel “Science in a time of change – Is Open science the future?” [German]

- Podcast Open Science in Research [German]

- Introduction to Open Research Data as part of Open Science Panel Vienna [German]

Slides

- Introduction to Altmetrics @ OKFN Austria Open Science Meetup

- Open Data and the Panton Principles for the Humanities – How do we go about that? @ Open Knowledge Festival 2014

- Altmetrics-based Visualizations Depicting the Evolution of a Knowledge Domain @ Science and Technology Indicators 2014

- Open Data @ Open Science on board the MS Wissenschaft [German]

Reports

- My objectives as a Panton Fellow

- First quarterly report on my Panton Fellowship activities

- Second quarterly report on my Panton Fellowship activities

- The third quarter of my Panton Fellowship in the rear view mirror

- Open data and the Panton Principles in the humanities