I haven’t blogged lately, mostly because I was busy moving to London. I will be with Mendeley for the next four months in the context of the Marie Curie project TEAM. My first week is over now, and I have already started to settle in thanks to the great folks at Mendeley, who have given me a very warm welcome!

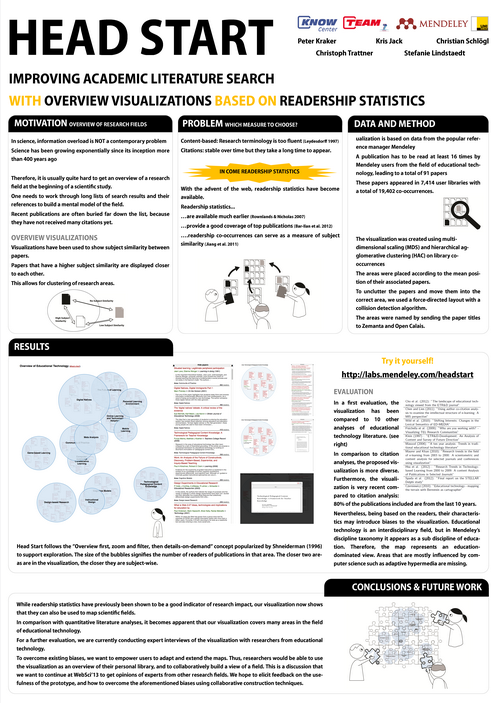

My secondment at Mendeley will focus on visualizing research fields with the help of readership statistics. A while ago, I blogged about the potential that readership statistics have for mapping out scientific fields. While those thoughts were rather theoretical, I have been taking a more practical look at the issue over the last few months. Together with Christian Körner, Kris Jack, and Michael Granitzer, I conducted a first exploratory study on the subject. This resulted in a paper entitled “Harnessing User Library Statistics for Research Evaluation and Knowledge Domain Visualization”, which I presented at the Large Scale Network Analysis Workshop at WWW 2012 in Lyon.

The problem

The problem we started out with is the lack of an overview of research fields. If you want to get an overview of a field, you usually go to an academic search engine and either type in a query or, if there has been some preselection, browse to the field of your choice. You are then presented with a large number of papers. You usually pick the most popular overview article, read through it, browse the references, and look at the recommendations or incoming citations (if available). You choose which paper to read next and repeat. Over time, this strategy allows you to build a mental model of the field. Unfortunately, there are a few issues with this approach:

- It is very slow.

- You never know when you are finished. Even with the best search strategy, you might still have a blind spot.

- Science and research are growing exponentially, which makes it very hard not only to get an overview, but also to keep it.

In come visualizations

Below you can see the visualization of the field of Technology Enhanced Learning developed in the exploratory study for the LSNA workshop. Here is how to read it: each bubble represents a paper, and the size of the bubble represents the number of readers. Each bubble is attributed to a research area denoted by a color, in this case either “Adaptive Hypermedia” (blue), “Game-based Learning” (red), or “Miscellaneous” (yellow). The closer two papers are in the visualization, the closer they are subject-wise. Moreover, the closer a paper is to the center of a field, the more central it is to that field. If you click on the visualization, you will get to an HTML5 version built with Google Charting Tools. In this interactive visualization, you can hover over a bubble to see the metadata of the paper.
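The code behind the layout is not part of this post. As a rough illustration only: one standard way to get a map in which distance stands in for subject similarity is classical (Torgerson) multi-dimensional scaling, the technique named in the next paragraph. The sketch below assumes you already have a symmetric similarity matrix scaled to [0, 1] and uses `1 - similarity` as the dissimilarity. The function name `classical_mds` and that conversion are my own assumptions, not necessarily what the study did.

```python
import numpy as np


def classical_mds(dissimilarity: np.ndarray, dims: int = 2) -> np.ndarray:
    """Embed items in `dims` dimensions so that Euclidean distances
    approximate a symmetric dissimilarity matrix (classical MDS)."""
    d = np.asarray(dissimilarity, dtype=float)
    n = d.shape[0]
    # Double-center the squared dissimilarities: B = -1/2 * J D^2 J
    j = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * j @ (d ** 2) @ j
    # eigh returns ascending eigenvalues of the symmetric matrix B;
    # keep the `dims` largest ones
    eigvals, eigvecs = np.linalg.eigh(b)
    order = np.argsort(eigvals)[::-1][:dims]
    # Clip small negative eigenvalues caused by rounding or non-Euclidean input
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale


# Hypothetical similarities between three papers: A and B are close, C is far away
similarity = np.array([[1.0, 0.9, 0.2],
                       [0.9, 1.0, 0.3],
                       [0.2, 0.3, 1.0]])
coords = classical_mds(1.0 - similarity)
```

Each row of `coords` is an (x, y) position for one paper. Bubble size (number of readers) and color (research area) would be layered on top of these positions by the charting tool.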

Usually, visualizations like this one are based on citations. Henry Small defined co-citation as a measure of subject similarity: the more often two authors or publications are referenced together in the same publication, the closer they are subject-wise. Using this measure in combination with multi-dimensional scaling and clustering, one can produce a visualization of a field. The co-citation measure is empirically well validated and has been used in hundreds, if not thousands, of studies. Unfortunately, there is a problem inherent in citations: they take a rather long time to appear. It takes three to five years before the number of incoming citations reaches its peak. Therefore, visualizations based on co-citations are actually a view of the past and do not reflect recent developments in a field.
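To make the co-citation idea concrete, here is a minimal sketch that counts co-citations from a hypothetical input format: one list of cited work IDs per citing publication. The function name and the data layout are illustrative assumptions; actual co-citation studies usually add normalization and thresholds on top of raw counts like these.

```python
from collections import Counter
from itertools import combinations


def co_citation_counts(reference_lists):
    """Count how often each pair of works is cited together.

    `reference_lists` holds one list of cited work IDs per citing publication.
    Two works are co-cited once for every publication that references both.
    """
    counts = Counter()
    for refs in reference_lists:
        # Deduplicate and sort so each unordered pair maps to a single key
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts


# Three hypothetical citing papers and their reference lists
counts = co_citation_counts([["A", "B", "C"], ["A", "B"], ["B", "C"]])
```

Here A and B are co-cited twice, B and C twice, and A and C once, so A and B (and B and C) would be drawn closer together than A and C.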

How to deal with the citation lag?

In the last few years, usage statistics have become a focus of research evaluation (see the altmetrics movement, for example), and in some cases also of visualization. Prior to the web and tools such as Mendeley, usage statistics were not available, at least not on a large scale. One advantage of usage statistics over citations is that they become available earlier: people can start reading a paper immediately after publication, and in the case of pre-prints even before that. The measure I used to produce the visualization above is the co-occurrence of publications in Mendeley libraries. Just as two books that are often borrowed from a library together are likely to cover the same or a similar subject, two papers that often appear together in user libraries are taken to be similar in subject. I used the Technology Enhanced Learning thesaurus to identify all libraries from that field. I then selected the 25 most frequent papers and calculated their co-occurrences.
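The selection and counting step described above might look roughly like this. It is a sketch under assumptions: each library is given as a collection of paper IDs and has already been filtered to the field (the thesaurus step is not shown), and `co_readership` is a hypothetical helper, not a Mendeley API.

```python
from collections import Counter
from itertools import combinations


def co_readership(libraries, top_n=25):
    """Return the top_n most frequent papers and their pairwise co-occurrence counts.

    `libraries` is an iterable of collections of paper IDs, one per user library.
    """
    libs = [set(lib) for lib in libraries]
    frequency = Counter(paper for lib in libs for paper in lib)
    # Most frequent papers first; ties are broken by paper ID for determinism
    ranked = sorted(frequency.items(), key=lambda kv: (-kv[1], kv[0]))
    top = [paper for paper, _ in ranked[:top_n]]
    index = {paper: i for i, paper in enumerate(top)}
    # Symmetric matrix of co-occurrence counts; the diagonal is left at 0
    matrix = [[0] * len(top) for _ in top]
    for lib in libs:
        present = sorted(index[p] for p in lib if p in index)
        for i, j in combinations(present, 2):
            matrix[i][j] += 1
            matrix[j][i] += 1
    return top, matrix


# Four hypothetical libraries, keeping only the three most frequent papers
top, matrix = co_readership(
    [{"p1", "p2", "p3"}, {"p1", "p2"}, {"p2", "p4"}, {"p1"}], top_n=3
)
```

Raw counts favor popular papers, so they are often normalized (for example with cosine similarity) before scaling and clustering; which normalization the study used is not covered here.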

As this was our first study, we limited ourselves to libraries from the field of computer science. As you can see, we were able to derive two areas pretty well: adaptive hypermedia and game-based learning. Both are very important for the field. Adaptive hypermedia is a core topic of the field, especially among computer scientists; game-based learning is an area that has received a lot of attention in the last few years and continues to be of great interest to the community. You will also have noticed that there is a huge cluster labelled “Miscellaneous”. These papers could not be attributed to a single research area. There are several possible reasons for this cluster; the most likely is that we did not have enough data. Another explanation is that Technology Enhanced Learning is still a growing field with diverse foci, which results in a large cluster of different publications. Furthermore, we expect readership to be less focused than citations. On the one hand, this makes it possible to show more influences on a field than citation data would; on the other hand, too little focus results in fuzzy clusters. To clarify these points, I am currently looking at a larger dataset, which includes all disciplines related to TEL (such as pedagogy, psychology, and sociology). Moreover, I am keen to learn more about people’s motivations for adding papers to their libraries.

In my view, visualizations based on co-readership have great potential. They could provide timely overviews and serve as navigational instruments. Furthermore, it would be interesting to take snapshots from time to time to see how a field develops over the years. Finally, such visualizations could help shed light on interdisciplinary relations and topical overlap between fields. These issues and their relation to semantics will be the topic of another blog post, though. For the time being, I am curious about your opinions on the matter. How do you see visualizations? Could they be useful for your research? What features would you like them to have? I am looking forward to your opinions!

Citation

Peter Kraker, Christian Körner, Kris Jack, & Michael Granitzer (2012). Harnessing User Library Statistics for Research Evaluation and Knowledge Domain Visualization. Proceedings of the 21st International Conference Companion on World Wide Web, 1017-1024. DOI: 10.1145/2187980.2188236